Ensemble Learning approach in Data Mining

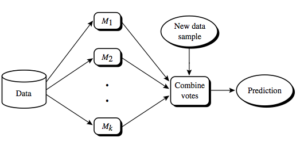

In day-to-day life, when a crucial decision has to be made in a meeting and the members' opinions conflict, the members present vote on it. The same principle of “voting” can be applied in data mining. In the voting scheme, when classifiers are combined, the class assigned to a test instance is the one predicted by the majority of the base-level classifiers in the ensemble.
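The voting rule itself takes only a few lines. The sketch below is a minimal illustration that assumes each base classifier has already produced one label for the test instance. The function name `majority_vote` is our own, and ties go to the label seen first, which is how Python's `Counter.most_common` orders equal counts.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the class label predicted by most base classifiers.

    `predictions` holds one label per base classifier for a single test
    instance. On a tie, the label encountered first wins, because
    Counter.most_common keeps first-seen order for equal counts.
    """
    label, _count = Counter(predictions).most_common(1)[0]
    return label

# Three hypothetical base classifiers disagree about one test instance.
print(majority_vote(["spam", "ham", "spam"]))  # → spam
```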

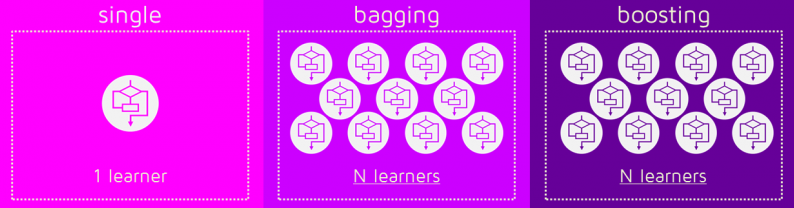

• Bagging and boosting are variants of the voting scheme. Bagging is a voting scheme in which n models, typically of the same type, are built, each trained on a bootstrap sample (a random sample of the training data drawn with replacement). For an unknown instance, every model's prediction is recorded, and the class that receives the most votes among those predictions is assigned.

• Boosting is very similar to bagging; only the model-construction step differs. Here, instances that are frequently misclassified take part in training more often (or, equivalently, receive larger weights). Each of the n classifiers has its own weight based on its accuracy, and the class that receives the greatest total weight is assigned. An example is the AdaBoost algorithm. Bagging is often preferred over boosting because boosting is more prone to overfitting, particularly on noisy data. Overfitting is the phenomenon in which a model performs well only on the training data: it has learned the training data too closely and does not generalize to unseen data.
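A minimal bagging sketch follows, assuming a single numeric feature and a decision stump as the base learner. The stump, the helper names `train_stump` and `bagging`, and the toy data are our own illustrative choices. Each of the n models is trained on its own bootstrap sample, and the ensemble returns the class with the most votes.

```python
import random
from collections import Counter

def train_stump(xs, ys):
    """Base learner (our assumption): a one-feature decision stump that
    predicts `left` when x <= threshold and `right` otherwise, using the
    threshold and labels that make the fewest training errors."""
    labels = sorted(set(ys))
    best = None
    for t in sorted(set(xs)):
        for left in labels:
            for right in labels:
                errors = sum((left if x <= t else right) != y for x, y in zip(xs, ys))
                if best is None or errors < best[0]:
                    best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def bagging(xs, ys, n_models=11, seed=0):
    """Train n_models stumps on bootstrap samples and combine them by majority vote."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw n instances *with replacement*.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(train_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        # Record every model's prediction and return the most-voted class.
        return Counter(m(x) for m in models).most_common(1)[0][0]

    return predict

xs = [1, 2, 3, 10, 11, 12]
ys = [0, 0, 0, 1, 1, 1]
model = bagging(xs, ys)
print(model(1), model(12))  # → 0 1
```

A fixed `seed` makes the run reproducible. In practice a library implementation such as scikit-learn's `BaggingClassifier` would normally be used instead of hand-written code.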
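Boosting can be sketched in the same style. The code below follows the standard AdaBoost recipe, assuming decision stumps on one feature and labels -1/+1; these choices and the names `fit_weighted_stump` and `adaboost` are ours. After each round the misclassified instances get larger weights, and each stump's vote is weighted by alpha = 0.5 * ln((1 - err) / err), where err is its weighted error, so more accurate classifiers count for more.

```python
import math

def fit_weighted_stump(xs, ys, w):
    """Return (weighted_error, threshold, sign) for the best stump.
    Labels are -1/+1; the stump predicts `sign` when x <= threshold
    and `-sign` otherwise."""
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if (sign if x <= t else -sign) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best

def adaboost(xs, ys, n_rounds=5):
    n = len(xs)
    w = [1.0 / n] * n              # start with equal instance weights
    ensemble = []                  # list of (alpha, threshold, sign)
    for _ in range(n_rounds):
        err, t, sign = fit_weighted_stump(xs, ys, w)
        if err >= 0.5:             # no better than chance: stop early
            break
        err = max(err, 1e-10)      # avoid dividing by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # lower error => bigger vote
        ensemble.append((alpha, t, sign))
        # Misclassified instances (y * h(x) = -1) get heavier, correct ones lighter.
        w = [wi * math.exp(-alpha * y * (sign if x <= t else -sign))
             for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]

    def predict(x):
        # Weighted vote: the class backed by the larger total alpha wins.
        score = sum(a * (s if x <= thr else -s) for a, thr, s in ensemble)
        return 1 if score >= 0 else -1

    return predict

xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]          # no single threshold separates these
model = adaboost(xs, ys)
print([model(x) for x in xs])      # → [1, 1, -1, -1, 1, 1]
```

On this toy data every single stump makes at least two mistakes, yet five boosted stumps classify every training point correctly. That ability to fit the training data very closely is also why boosting can overfit when the data is noisy.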

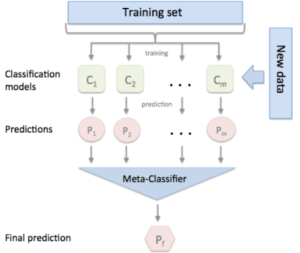

There are two approaches to combining models. One uses voting, in which the class predicted by the majority of the models is chosen; the other is stacking, in which the predictions of each base model are given as input to a meta-level classifier whose output is the final class. Whether voting or stacking is used, an ensemble can be constructed in two ways: as a homogeneous ensemble, in which all classifiers are of the same type, or as a heterogeneous ensemble, in which the classifiers are of different types.
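A heterogeneous ensemble can be sketched by voting over three different kinds of classifier trained on the same data. The three learners used here (a threshold rule, 1-nearest neighbour, and nearest centroid), the function names, and the toy data are our own illustrative choices, not taken from the text.

```python
from collections import Counter

def fit_threshold(xs, ys):
    """Kind 1: predict 1 above the training value whose threshold makes fewest errors."""
    def errors(t):
        return sum(int(x > t) != y for x, y in zip(xs, ys))
    t = min(xs, key=errors)
    return lambda x: int(x > t)

def fit_1nn(xs, ys):
    """Kind 2: 1-nearest neighbour, i.e. copy the label of the closest training value."""
    return lambda x: min(zip(xs, ys), key=lambda xy: abs(xy[0] - x))[1]

def fit_centroid(xs, ys):
    """Kind 3: nearest centroid, i.e. the class whose mean training value is closest."""
    means = {c: sum(x for x, y in zip(xs, ys) if y == c) / ys.count(c) for c in set(ys)}
    return lambda x: min(means, key=lambda c: abs(means[c] - x))

def heterogeneous_vote(xs, ys):
    """Train one model of each kind and combine them by majority vote."""
    models = [fit(xs, ys) for fit in (fit_threshold, fit_1nn, fit_centroid)]
    return models, lambda x: Counter(m(x) for m in models).most_common(1)[0][0]

xs = [1, 2, 3, 4, 7, 8, 9]
ys = [0, 0, 0, 1, 1, 1, 1]
models, predict = heterogeneous_vote(xs, ys)
print([m(3.6) for m in models], predict(3.6))  # → [1, 1, 0] 1
```

At x = 3.6 the nearest-centroid model disagrees with the other two, and the vote settles the conflict in favour of the majority. Replacing the three learners with three copies of the same kind would turn this into a homogeneous ensemble.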

The basic difference between stacking and voting is that in voting no learning takes place at the meta level, because the final class is decided by the majority of votes cast by the base-level classifiers, whereas in stacking a meta-level classifier learns how to combine the base-level predictions.
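The contrast can be made concrete with a small stacking sketch. All of the following are our assumptions: two features, one decision stump per feature as the base-level classifiers, and, for brevity, a lookup-table meta-classifier that learns from a held-out split which final class each combination of base predictions most often corresponds to. Real systems typically train the meta level on cross-validated base predictions with a model such as logistic regression, which is what scikit-learn's `StackingClassifier` does by default.

```python
from collections import Counter, defaultdict

def train_stump(points, labels, feature):
    """Base-level learner: a threshold on one feature (labels 0/1),
    chosen to make the fewest training errors."""
    best = None
    for t in sorted({p[feature] for p in points}):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if p[feature] <= t else right) != y
                         for p, y in zip(points, labels))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda p: left if p[feature] <= t else right

def train_stacking(base_pts, base_y, meta_pts, meta_y):
    # Level 0: one base classifier per feature, fitted on the first split.
    base = [train_stump(base_pts, base_y, f) for f in (0, 1)]
    # Level 1: the meta-classifier *learns* from held-out data which final
    # class each combination of base predictions most often corresponds to.
    table = defaultdict(Counter)
    for p, y in zip(meta_pts, meta_y):
        table[tuple(m(p) for m in base)][y] += 1
    fallback = Counter(meta_y).most_common(1)[0][0]

    def predict(p):
        key = tuple(m(p) for m in base)
        return table[key].most_common(1)[0][0] if key in table else fallback

    return base, predict

# Hypothetical data: class 1 only when BOTH features exceed 0.5.
base_pts = [(0.1, 0.1), (0.2, 0.9), (0.9, 0.2), (0.8, 0.8), (0.3, 0.4), (0.7, 0.6)]
base_y = [0, 0, 0, 1, 0, 1]
meta_pts = [(0.1, 0.2), (0.2, 0.8), (0.9, 0.1), (0.9, 0.9), (0.6, 0.7), (0.4, 0.3)]
meta_y = [0, 0, 0, 1, 1, 0]
base, stacked = train_stacking(base_pts, base_y, meta_pts, meta_y)
q = (0.95, 0.05)
print([m(q) for m in base], stacked(q))  # → [1, 0] 0
```

For the query (0.95, 0.05) the two base classifiers disagree, so a two-model vote would be tied. The meta level, however, has learned from held-out examples that this combination of base predictions means class 0.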